Application to Probability of 'go'

Kevin Kunzmann

Source:vignettes/web_only/probability-of-go.Rmd

probability-of-go.Rmd

library(oncomsm)

library(ggplot2)

library(dplyr, warn.conflicts = FALSE)

library(tidyr)

library(future) # parallel processing

library(doFuture)

#> Loading required package: foreach

library(doRNG) # parallel safe random number generation

#> Loading required package: rngtools

registerDoFuture()

plan(multisession) # instruct future how to run in parallel

set.seed(42L)tl;dr: A multi-state model allows sampling individual-level data. This can be used to impute future trial data from the prior-predictive distribution at the planning stage. The generated trial data can then be used to evaluate quantitative ‘go’ decision criteria to determine whether the sampled data would lead to a ‘go’ decision. The average sampled ‘go’ rate over multiple such data sets is the a MCMC approximation of the probability of ‘go’. A similar approach can be taken at any interim time point by sampling from the posterior predictive distribution conditioning on any observed data. This then leads to an updated (or conditional) probability of ‘go’.

For a general introduction to the multi-state approach used throughout this package, see the vignette Multi-state Models for Early Oncology.

Decision criteria

Let \(D_t \in \mathbb{D}\) be the observed (visit) data at time point \(t\) after the start of the trial. Let \(\tau\) be the stopping time of the trial, e.g., the time point when all \(n\) individuals have been recruited and have reached their minimal follow-up. The final decision on ‘go’ can then be modeled as a function \(\phi: \mathbb{D} \to \{0,1\}\) with

\[\phi(D_\tau)=1 :\Leftrightarrow D_\tau\ \ \text{leads to 'go'}\ . \]

Examples for such decision rules could be

- a quantile of the posterior distribution of the response rate being above a certain relevance threshold,

- a quantile of the posterior distribution of the PFS6 rate being above a certain relevance threshold,

- a quantile of the posterior distribution of PFS being above a certain threshold,

- or a combination of the above.

Probability of ‘go’

Let \(\theta\) be a vector of parameters for a generative model that allows to sample data \(D_\tau|\theta\). Probability of ‘go’ can the be calculated as expected value of the decision rule under a prior distribution (density) \(f(\theta)\) over the parameter space: \[ \operatorname{Pr}\big[\,go\,\big] = \int \phi(D_\tau\,|\,\theta) \cdot f(\theta) \operatorname{d}\theta \ . \] In practice, this integral can be approximated by sampling from the generative model and calculating the average ‘go’ rate.

If data \(D_t=d_t\) is observed, the probability of ‘go’ can be updated using Bayes Theorem \[ \operatorname{Pr}\big[\,go\,|\, D_t=d_t \,\big] = \int \phi(D_\tau\,|\,D_t=d_t,\theta) \cdot f(\theta\,|\,D_t=d_t) \operatorname{d}\theta \] where \(f(\theta\,|\,D_t=d_t)\) is the posterior density given the data \(d_t\) observed up to time \(t\).

Example

mdl <- create_srpmodel(

A = define_srp_prior(

p_mean = 0.5, p_n = 3, p_eta = 0.2,

recruitment_rate = 2

)

)

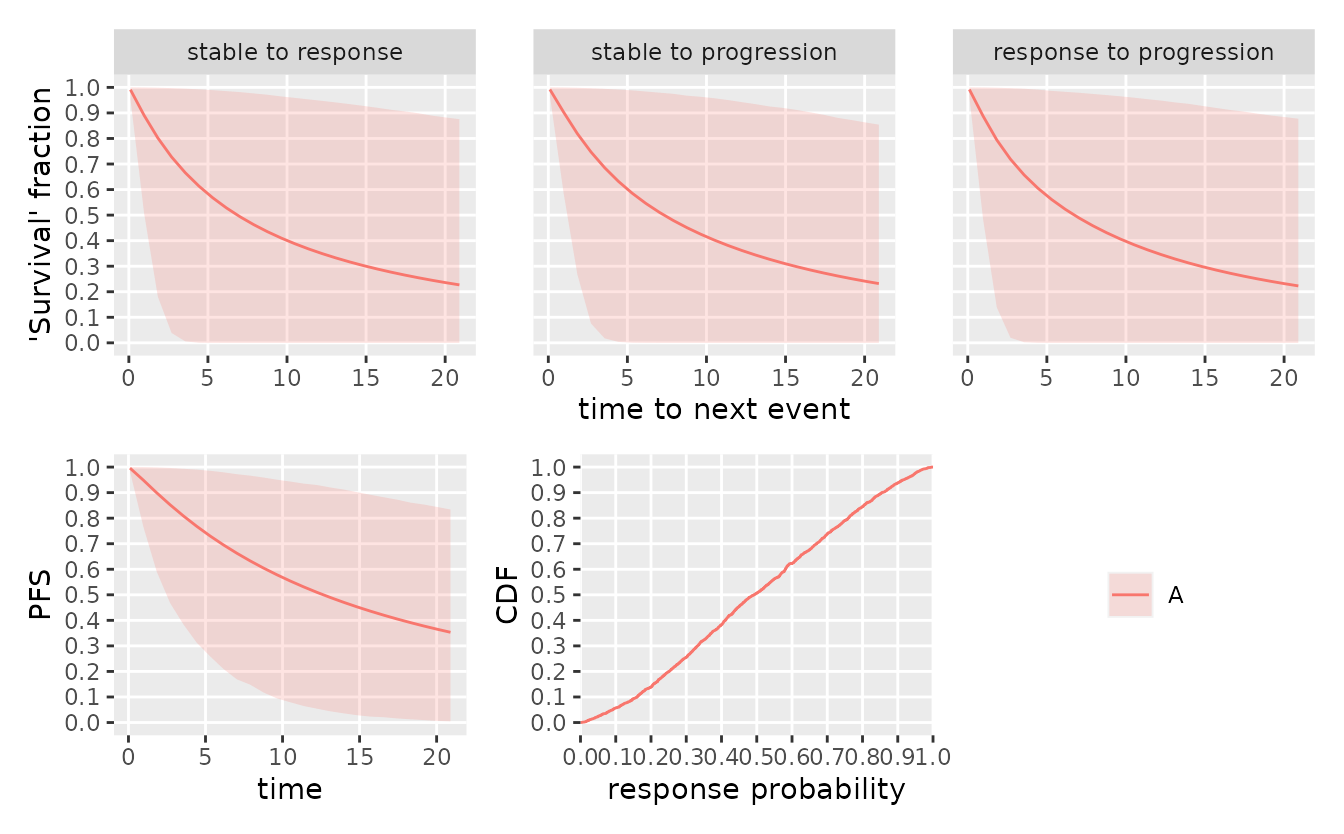

smpl_prior <- sample_prior(mdl, seed = 6835L)

plot(mdl, parameter_sample = smpl_prior, confidence = 0.9)

ORR and PFS ‘go’ criterion

Assume that a ‘go’ is defined as a combination of a sufficiently high response rate and a sufficiently high progression-free-survival rate at 12 months:

\[\phi(D_\tau) := \begin{cases} 1 \quad \text{if} \quad \operatorname{Pr}\big[\,\text{ORR} \geq 0.3\ \&\ \text{PFS}_{t=12} \geq 0.4 \, | \, D_\tau\,\big] \geq 0.8 \\ 0 \quad \text{else.} \end{cases}\]

This decision criterion can be implemented as the following function:

go <- function(model, data, nsim = 200L) {

set.seed(3819308) # fix the seed for reproducibility

smpl <- suppressWarnings( # low sample size leads to stan warnings on ESS

# can be ignored, errors average out

# can also be avoided by increasing nsim

sample_posterior(model, data = data, warmup = 200L, nsim = nsim)

)

tbl_pfs_orr <- inner_join(

compute_pfs(model, 12, smpl), # PFS 12

parameter_sample_to_tibble(model, smpl) %>% # ORR

filter(parameter == "p") %>%

transmute(iter = 1:nsim, group_id, orr = value),

by = c("iter", "group_id")

)

res <- tbl_pfs_orr %>% # apply decision criterion

group_by(group_id) %>%

summarize(

go = mean(pfs >= 0.5 & orr >= 0.3) >= 0.8

)

return(res)

}The criterion can be tested by sampling from the model under fixed, very favorable parameters - here we clearly expect a ‘go’.

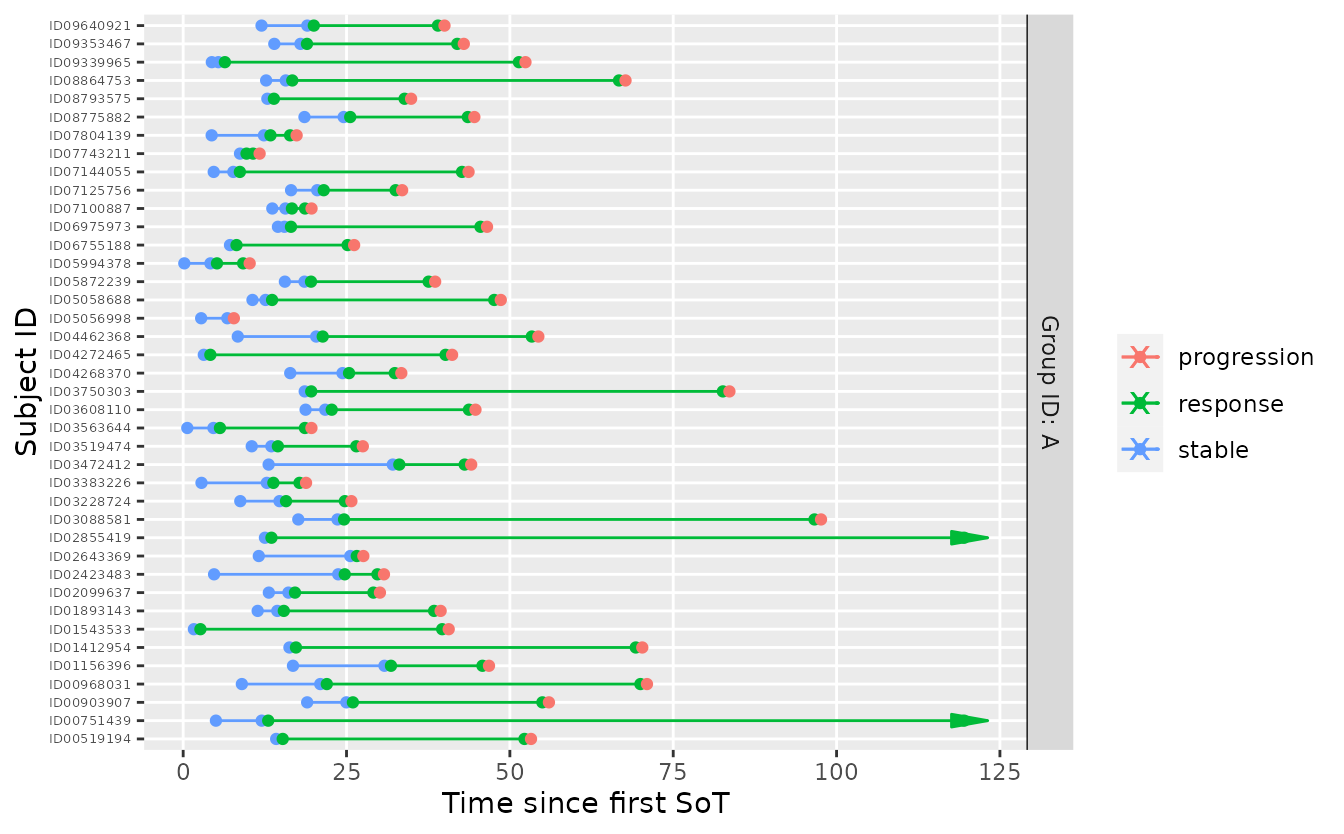

tbl_prior_predictive <- sample_predictive(

mdl,

n_per_group = 40L,

sample = smpl_prior,

p = 1,

shape = matrix(c(1, 1, 1), ncol = 3),

scale = matrix(c(4, 2, 24), ncol = 3) / log(2),

nsim = 1L,

seed = 34930L

)

tbl_prior_predictive %>%

visits_to_mstate(mdl) %>%

plot_mstate(mdl, relative_to_sot = FALSE)

go(mdl, tbl_prior_predictive)

#> # A tibble: 1 × 2

#> group_id go

#> <chr> <lgl>

#> 1 A TRUECalculating ‘go’ probability

Next, we can create a table of multiple prior-predictive samples.

Grouping them by iteration and nesting the data frames results in a data

frame of data frames, where tbl_prior_predictive$data[[i]]

corresponds to the data in the \(i\)-th

resample.

tbl_prior_predictive <- sample_predictive(

mdl,

n_per_group = 40L,

sample = smpl_prior,

p = 0.6,

shape = matrix(c(1, 1, 1), ncol = 3),

scale = matrix(c(3, 12, 24), ncol = 3) / log(2),

nsim = 100L,

seed = 34930L

) %>%

group_by(iter) %>%

tidyr::nest() %>%

ungroup()

print(tbl_prior_predictive)

#> # A tibble: 100 × 2

#> iter data

#> <int> <list>

#> 1 1 <tibble [1,600 × 4]>

#> 2 2 <tibble [1,686 × 4]>

#> 3 3 <tibble [1,083 × 4]>

#> 4 4 <tibble [1,205 × 4]>

#> 5 5 <tibble [911 × 4]>

#> 6 6 <tibble [1,104 × 4]>

#> 7 7 <tibble [1,200 × 4]>

#> 8 8 <tibble [1,441 × 4]>

#> 9 9 <tibble [1,518 × 4]>

#> 10 10 <tibble [1,059 × 4]>

#> # ℹ 90 more rowsApplying the decision criterion to each of them and averaging over all iterations is an MCMC approximation of the probability of ‘go’.

# compute results in parallel

res <- foreach(i = seq_len(nrow(tbl_prior_predictive))) %dorng% {

go(mdl, tbl_prior_predictive$data[[i]])

}

# bind results together and aggregate to probability of 'go'

tbl_pr_go <- bind_rows(res, .id = "iter") %>%

group_by(group_id) %>%

summarize(

`Pr[go]` = mean(go),

se = sd(go) / sqrt(n())

)

print(tbl_pr_go)

#> # A tibble: 1 × 3

#> group_id `Pr[go]` se

#> <chr> <dbl> <dbl>

#> 1 A 0.99 0.01The same functionality is available is the function

oncomsm::simulate_decision_rule.

Probability of ‘go’ during a trial

The Bayesian generative model allows calculating the posterior distribution at any time point given the data observed up to that point. The future course of the trial can then be sampled from the posterior predictive distribution.

To illustrate the shift in ‘go’ probability over time, a single trial realization from a set of fixed response probabilities that conflict with the prior mean is sampled as hypothetical data. As data accrues, the evidence will lead to a shift in the posterior probability and thus the probability of ‘go’.

We just take the first sampled trial as ground truth.

tbl_data <- tbl_prior_predictive$data[[1]]Next, interim views of the full data set at a sequence of time points can be created. This data subsets correspond to the data available at potential interim analyses.

tbl_data_interims <- tibble(

t_interim = c(1, 3, 6, 9, 12, 18, 24) # interim time point (months)

) %>%

mutate(

data = purrr::map(t_interim, ~filter(tbl_data, t <= .))

)

print(tbl_data_interims)

#> # A tibble: 7 × 2

#> t_interim data

#> <dbl> <list>

#> 1 1 <tibble [2 × 4]>

#> 2 3 <tibble [10 × 4]>

#> 3 6 <tibble [37 × 4]>

#> 4 9 <tibble [76 × 4]>

#> 5 12 <tibble [131 × 4]>

#> 6 18 <tibble [311 × 4]>

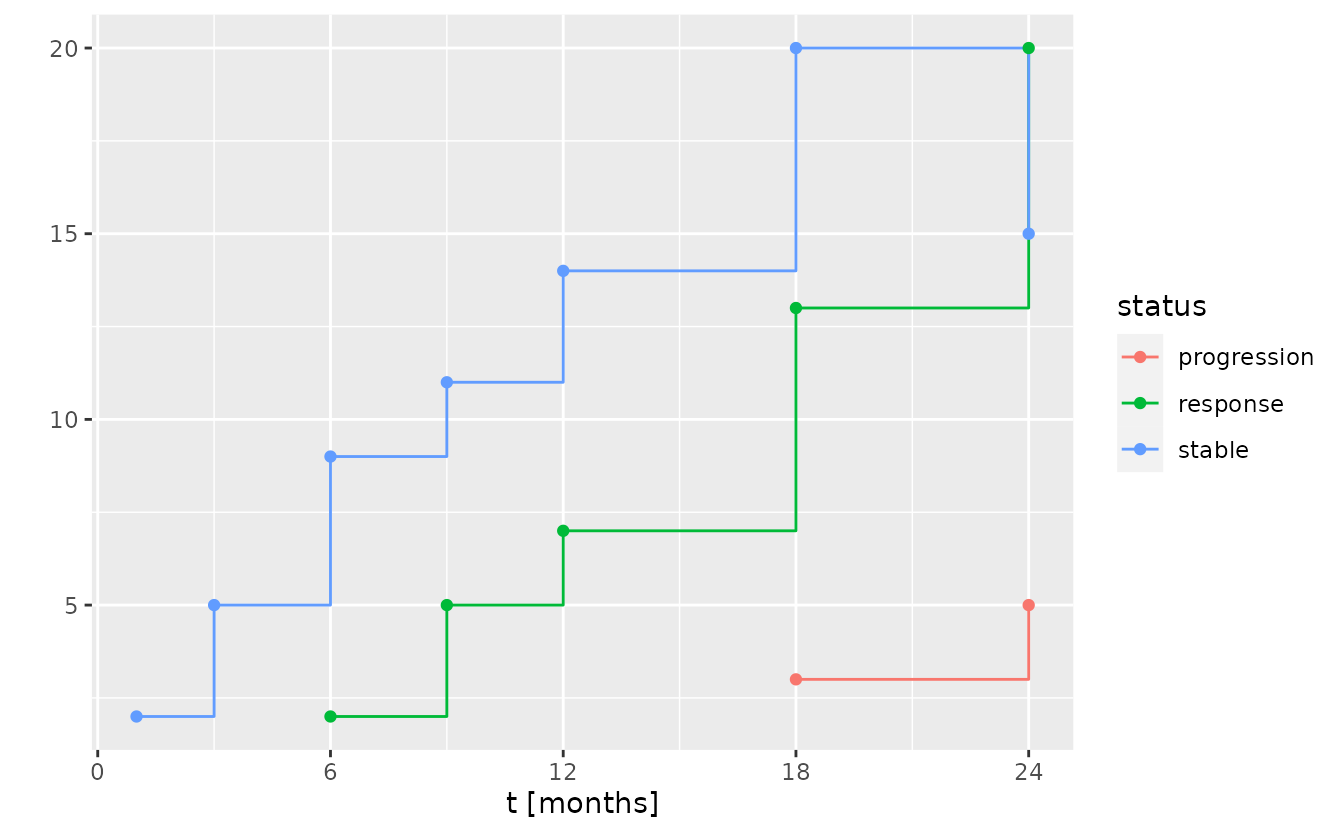

#> 7 24 <tibble [527 × 4]>This can be compared to the number of available responses, progressions, and stable individuals at each time point.

f <- function(tbl) {

tbl %>%

group_by(group_id, subject_id) %>%

summarize(

status = {

if (all(state == "stable")) {

"stable"

} else {

state[which(state != "stable")[1]]

}

},

.groups = "drop_last"

) %>%

group_by(group_id, status) %>%

summarize(n = n(), .groups = "drop")

}

tibble(

t_interim = tbl_data_interims$t_interim,

summary = purrr::map(tbl_data_interims$data, f)

) %>%

unnest(summary) %>%

ggplot() +

aes(t_interim, n, color = status) +

geom_step() +

geom_point() +

scale_x_continuous("t [months]", breaks = seq(0, 36, by = 6)) +

scale_y_continuous("", breaks = seq(0, 30, by = 5))

For each such interim data set, the remainder of the trial can be imputed by sampling form the posterior-predictive distribution given the data observed up to this point. The probability of ‘go’ is then the average ‘go’ rate in the posterior predictive sample when applying the decision criterion.

First, the calculation of the probability to ‘go’ is wrapped in a

function that accepts a dataset (tbl) and the number of

resamples (nsim).

This wrapper around oncomsm::simulate_decision_rule

pr_go <- function(tbl, model, nsim = 100L) {

tbl_pr_go <- simulate_decision_rule(mdl, 40, go, data = tbl,

nsim = nsim, seed = 27307) %>%

group_by(group_id) %>%

summarize(

`Pr[go]` = mean(go),

se = sd(go) / sqrt(n())

)

return(tbl_pr_go)

}Next, this function is mapped over all interim time points with the respective interim data. The number of simulations is the main factor in determining the runtime of the program besides the number of samples drawn from the posterior distribution to evaluate the decision criterion.

tbl_pr_go_over_time <- tbl_data_interims %>%

mutate(

res = purrr::map(data, pr_go, nsim = 33L)

) %>%

tidyr::unnest(res)

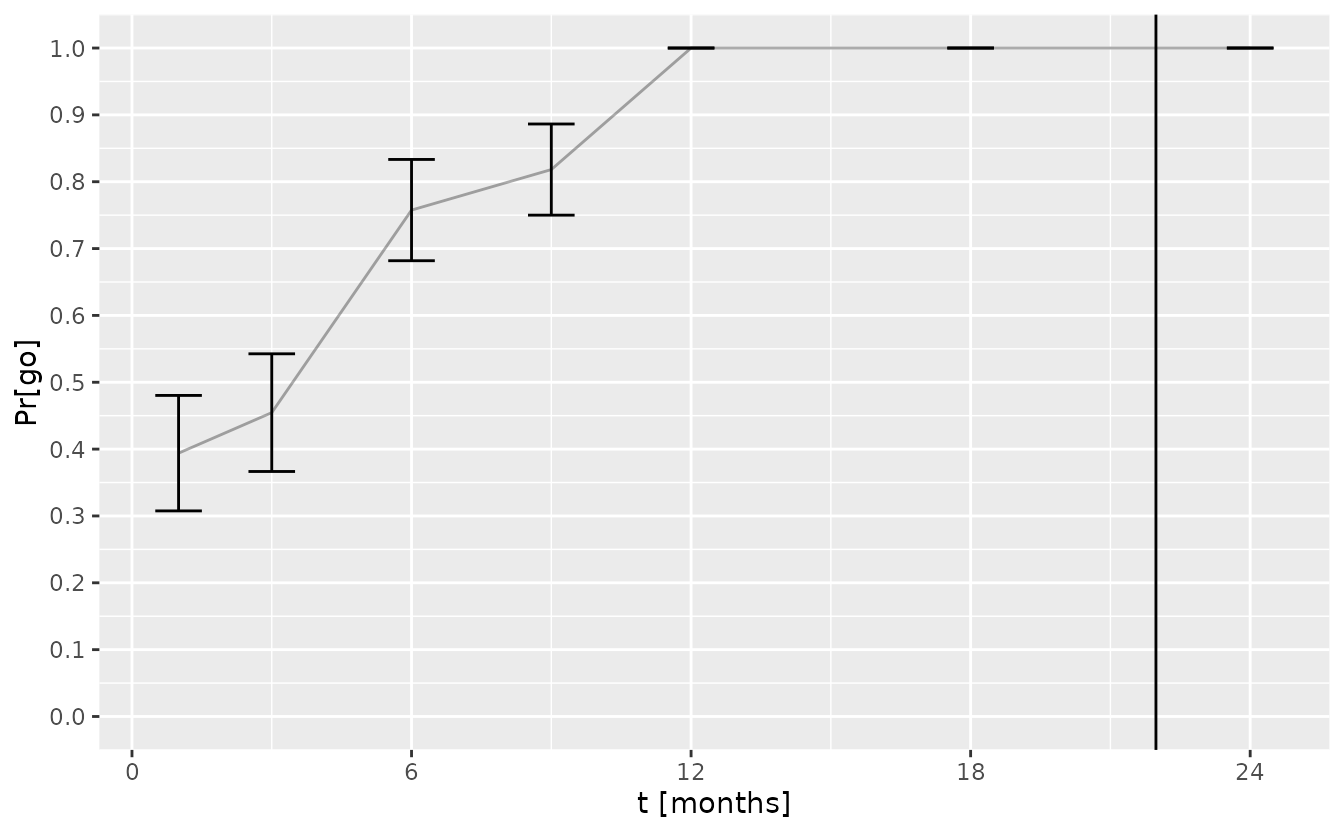

tbl_pr_go_over_time

#> # A tibble: 7 × 5

#> t_interim data group_id `Pr[go]` se

#> <dbl> <list> <chr> <dbl> <dbl>

#> 1 1 <tibble [2 × 4]> A 0.394 0.0864

#> 2 3 <tibble [10 × 4]> A 0.455 0.0880

#> 3 6 <tibble [37 × 4]> A 0.758 0.0758

#> 4 9 <tibble [76 × 4]> A 0.818 0.0682

#> 5 12 <tibble [131 × 4]> A 1 0

#> 6 18 <tibble [311 × 4]> A 1 0

#> 7 24 <tibble [527 × 4]> A 1 0The change of the ‘go’ probability over time is shown below with error bars corresponding to the standard error of the simulation.

t_last_patient_follow_up <- tbl_data %>%

visits_to_mstate(mdl) %>%

pull(t_sot) %>%

max() + 3 # assuming minimal follow-up of three months

ggplot(tbl_pr_go_over_time) +

aes(t_interim) +

geom_errorbar(aes(ymin = `Pr[go]` - se, ymax = `Pr[go]` + se), width = 1) +

geom_line(aes(y = `Pr[go]`), alpha = 0.33) +

geom_vline(xintercept = t_last_patient_follow_up) +

scale_x_continuous("t [months]", breaks = seq(0, 36, by = 6)) +

scale_y_continuous(breaks = seq(0, 1, by = 0.1), limits = c(0, 1))